- Rumors of Apple partnering with external GenAI providers highlight potential nuanced AI strategy to meet the needs of disparate markets.

- Regulatory compliance, local needs are drivers for possible Baidu tie-up in China.

- Apple’s global GenAI strategy – the how and why of in-house vs Gemini.

Generative Artificial Intelligence (GenAI) has emerged as a focal point of innovation within the smartphone industry, captivating the attention of Original Equipment Manufacturers (OEMs) worldwide. Companies such as Samsung, HONOR, and Google have made significant strides by introducing smartphones powered by GenAI technology, reshaping the landscape of mobile devices. Notably, GenAI integration has taken center stage within the Android ecosystem, positioning Android as a frontrunner in leveraging AI capabilities.

In contrast, Apple has been perceived as lagging in this domain, prompting recent discussions of potential collaborations with tech giants like Google and Baidu to integrate their advanced GenAI solutions into the Apple ecosystem. Anticipation is building as rumors suggest that Apple may unveil details of these strategic partnerships at the upcoming Worldwide Developers Conference (WWDC) scheduled for June, signaling a potential shift in the dynamics of AI adoption in the smartphone market.

Bloomberg and the New York Times recently published articles about Apple’s potential deal with Google. Chinese media also carried news about Apple being in talks with Baidu to introduce GenAI technologies in the iPhone.

Here is our take on the contours of Apple’s potential partnership with Baidu and Google:

Apple With Baidu, All for Compliance?

Regulatory Compliance

In the dynamic landscape of Chinese technology regulations, Apple’s pursuit of integrating GenAI into its ecosystem necessitates collaboration with a local partner. A tie-up with a Chinese partner would also enable Apple to bring local knowledge to its offering.

China’s stringent regulatory framework, in particular the rules around the collection of sensitive public data, makes it highly improbable that AI models developed by Western entities will receive the necessary approvals. AI models of Chinese origin are favored. This regulatory environment compels Apple to forge new alliances within the country, with Baidu emerging as a potential partner.

Localized AI Solutions

Baidu, China’s top Internet search engine provider, has one of the 40 GenAI models approved by Chinese regulators, the most famous being its Ernie chatbot.

Baidu claims its latest version, the Ernie Bot 4.0, outperforms GPT-4 in Chinese, leveraging the world’s largest Chinese language corpus for training.

It excels at grasping Chinese linguistic subtleties, traditions, and history, and can compose acrostic poems, areas in which ChatGPT may struggle.

Although nothing has been officially confirmed, should negotiations between Apple and Baidu prove fruitful, Baidu’s progress in on-device LLMs would accelerate sharply, particularly in system optimization, given that about one in five Chinese smartphone users owns an iPhone.

Apple might also consider partnering with other GenAI providers in China, like Zhipu AI and its prominent GLM-130B model, or Moonshot AI with its Kimi Chatbot, notable for processing up to 2 million Chinese characters per prompt.

Regardless of Apple’s choice, embedding a Chinese LLM into the forthcoming iOS 18 seems improbable. Apple will not let any third-party model weaken its influence on the iOS ecosystem. Consequently, Apple might seek a more collaborative partnership with Baidu, with inferencing largely restricted to the cloud. Such a partnership would showcase Apple’s flexibility and strategic maneuvering within China’s regulatory framework, marking a new era in its AI ventures in the Chinese market.

Apple and Google, Friend or Foe?

Apple is also rumored to be considering a global collaboration with Google to integrate the Gemini AI model on iPhones.

Catching up in AI

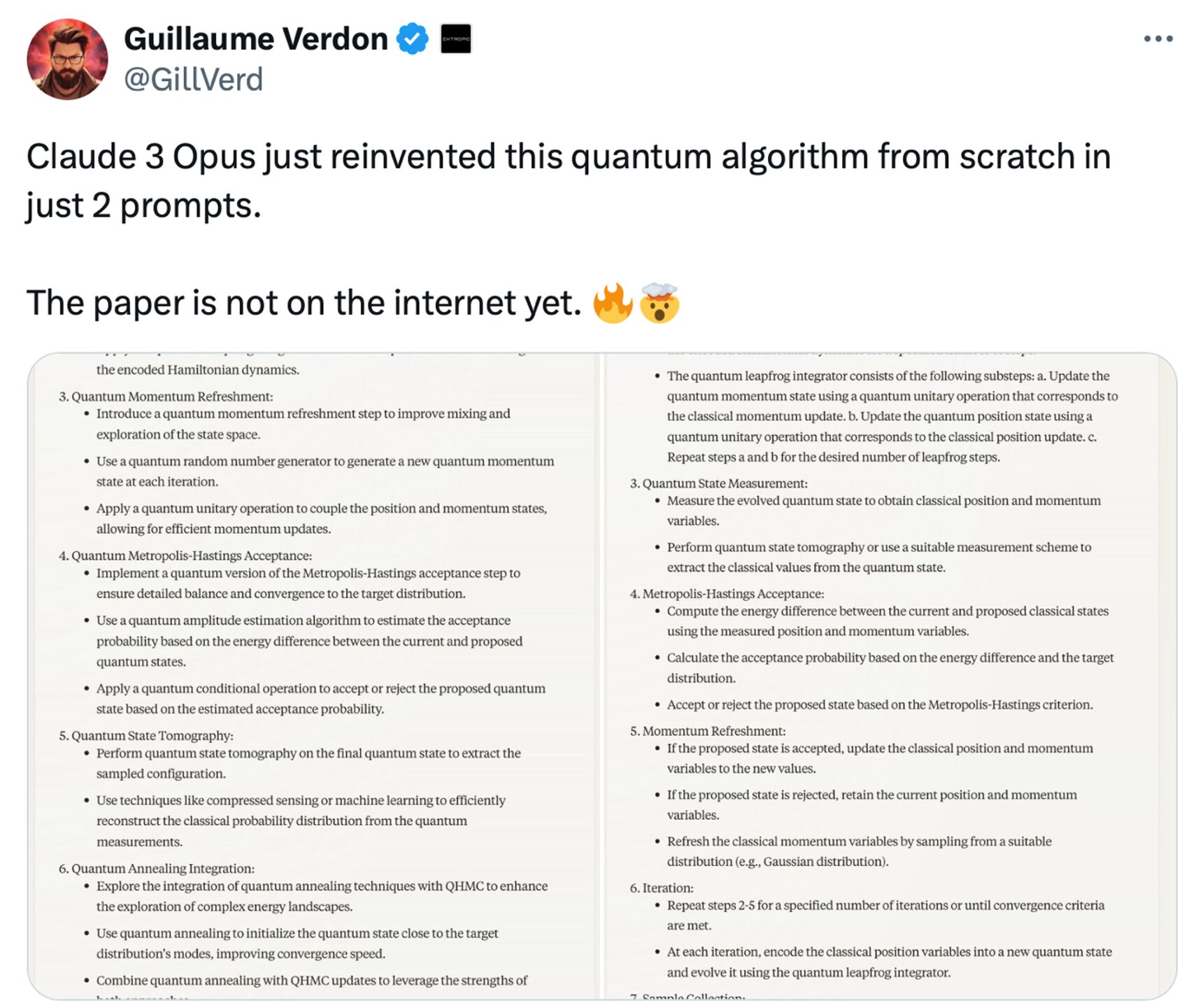

Gemini is a suite of large language models developed by Google, renowned for its robust capabilities and extensive applications. While Apple is actively developing its own AI models, including a large language model codenamed Ajax and a basic chatbot named Apple GPT, Apple’s technology remains unproven.

This makes collaboration with Google a more prudent choice for now. The potential use of Google’s Gemini AI would underscore an effort by Apple to incorporate sophisticated AI functionalities into its devices, beyond its in-house capabilities.

External AI integration

While Apple may use its own models for some on-device features in the upcoming iOS 18 update, partnering with an external provider like Google for GenAI use cases (such as image creation and writing assistance) could indicate a significant shift towards enhancing user experiences with third-party AI technologies.

Apple might follow the strategy of Chinese OEMs like OPPO, vivo, HONOR and Xiaomi, all of which have in-house LLMs deployed in their latest flagship models. Meanwhile, these OEMs work with third parties like Baichuan, Baidu and Alibaba to leverage a broader GenAI ecosystem. With this strategy, Chinese OEMs keep full control of the local large model and of the resulting hardware and software changes that closely relate to smartphone design, and they can continue to make a profit by simultaneously owning an app store and an AI agent. If Apple were to follow this strategy for international markets, it may seek alliances not only with Google but also with OpenAI and Anthropic. This approach would mean that Apple would not have to open iOS to third-party LLMs.

If Apple were indeed to incorporate Gemini into its new operating system to offer users an enhanced, more intelligent experience, these advancements would primarily be focused on on-device features rather than cloud-based ones. This makes Gemini Nano, the most lightweight model in Google’s Gemini family, the preferred choice, as it has already been optimized for and embedded in Google’s Pixel 8 smartphone.

Implications of Apple’s Potential LLM Partnerships

In China, a potential partnership with Baidu would ensure Apple’s offerings are both culturally attuned and compliant with local regulations, while the global collaboration with Google promises to inject a new level of intelligence into the iPhone’s capabilities, thus redefining the essence of mobile user experience for Apple.

These types of partnerships would highlight a nuanced approach to AI integration, tailored to meet the distinct needs of different markets.
